Power of AI for Everyone

There is Nothing wrong with AI,

there is Everything wrong with the concentration of AI.

Current AI Problems

Deep Learning based Artificial Intelligence requires massive amounts of data and compute power. This fits perfectly into the existing Cloud Computing model and substantially worsens the already massive Digital Inequality caused by Cloud Platforms.

1.1. AI deciding who to Kill

Artificial Intelligence (AI) is starting to be used to pick targets, not just to destroy them. This is no longer about AI killing humans; it is about AI deciding who to kill.

That is, AI is being used across the full spectrum: as the police, the jury, the judge and the executioner.

As Microsoft boosted its funding of OpenAI, the maker of ChatGPT, it laid off its AI ethics and society team, the opposite of what is needed for the responsible use of AI.

Your Private Cyberspace's AI capabilities are fully controlled by you for more private and hopefully less violent endeavours!

1.2. Not just Coffees at Cafes

How many people at the BrainWash Cafe in San Francisco know that their faces were used to help develop AI technologies for foreign militaries?

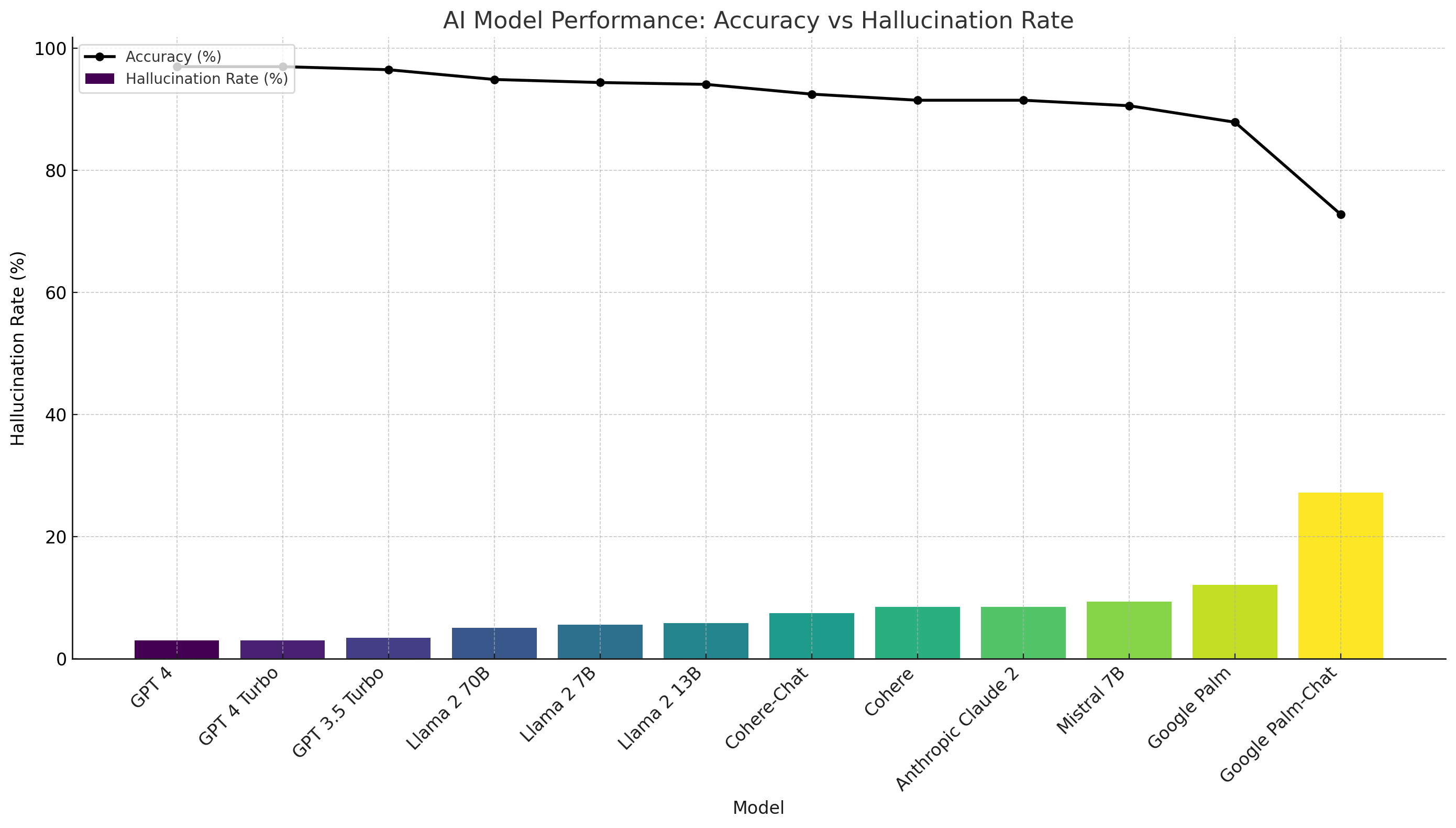

1.3. Hallucination

source: prompt engineering

Partition AI Solution

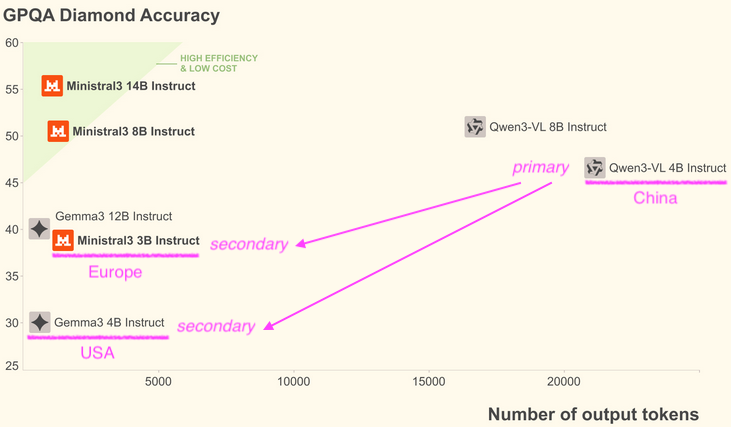

Personalised Artificial Intelligence means higher accuracy and the ultimate in privacy.

Your Agent is like your own personal AI helper that operates your cyberspace on your behalf. Training your agent, such as teaching it to understand your speech, is done on YOUR device for your benefit instead of the cloud's benefit.

As if using your data to influence your behaviour were not bad enough, leaking your data could now cause you substantial harm.

Current AI developments are mainly based on machine learning, which must be fed with your data FIRST, so protecting your data is more important than ever before.

No matter how advanced an adversary's machine learning capabilities are, it still needs to get at your data - data that you can start protecting - NOW.

2.1. AI

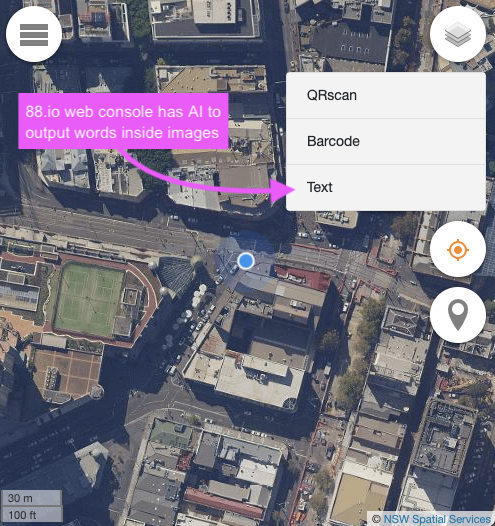

88.io is about providing AI capability to the masses, so everyone can decide HOW AI impacts their individual lives, instead of just being impacted en masse BY AI.

You can experience some of 88.io's AI capability with just ONE CLICK.

It can be used to scan most images: your weight on your bathroom scale, the number plate of the bus you are about to board, the receipt for a meal you just had, etc.

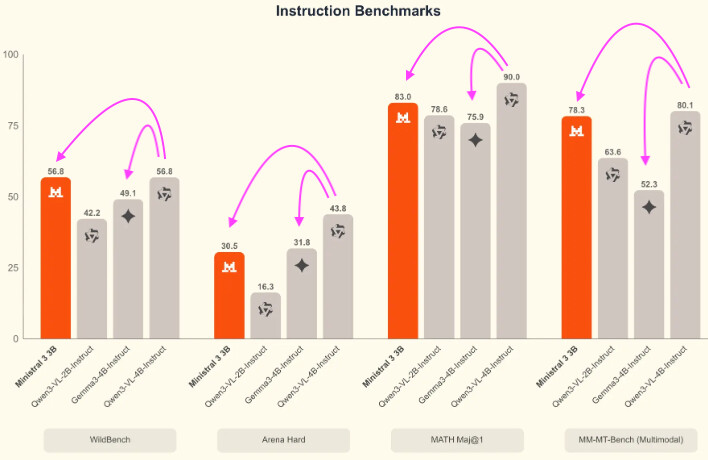

AI Ownership

As machine learning acceleration of common AI software becomes available on more commodity hardware (e.g. TensorFlow inference used to be accelerated only by Google's own Coral hardware, but now we can use OpenVINO on Intel hardware and TensorRT on NVIDIA hardware), everyday folks are increasingly able to perform, on their own hardware, AI operations that traditionally were only feasible in the Cloud.
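
To make the point about commodity hardware concrete, here is a minimal sketch of how a program might check which local inference runtimes are installed on your own machine before falling back to anything else. The preference order, the `BACKENDS` table and the `pick_backend` helper are assumptions made up for illustration; they are not part of 88.io or of any of the libraries named. The sketch only checks whether the Python packages `tensorrt`, `openvino`, `tflite_runtime` and `tensorflow` can be found; it does not load a model or run inference.

```python
from importlib.util import find_spec

# Hypothetical preference order, chosen for illustration only.
# Each entry is (top-level Python module name, human-readable description).
BACKENDS = [
    ("tensorrt", "NVIDIA hardware via TensorRT"),
    ("openvino", "Intel hardware via OpenVINO"),
    ("tflite_runtime", "TensorFlow Lite runtime"),
    ("tensorflow", "plain TensorFlow"),
]


def installed(module_name):
    """Return True if a top-level Python module can be found on this machine."""
    return find_spec(module_name) is not None


def pick_backend(is_installed=installed):
    """Return (module, description) for the first available backend, or None.

    `is_installed` can be swapped out, which makes the choice easy to test
    without having any of the runtimes installed.
    """
    for module_name, description in BACKENDS:
        if is_installed(module_name):
            return module_name, description
    return None
```

Calling `pick_backend()` returns the first runtime found on your device, or `None` if none of them is installed, in which case nothing has to be sent to the Cloud to find that out.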