Kubernetes

Kubernetes (K8s) was designed by Google for Google-style computing, but few organisations running K8s have the same applications and resources as Google.

Even for organisations with thousands of containers, orchestrating them all through a single control plane creates a large reliability and security risk. The bigger the organisation, the greater the need to compartmentalise workloads across many control planes, so that a single bad update, hack or mistake cannot bring all of them down.
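To make the compartmentalisation argument concrete, here is a minimal sketch. It assumes containers are spread as evenly as possible across control planes and that one bad update, hack or mistake takes down exactly one control plane together with the containers it manages. The function name `blast_radius` is hypothetical.

```python
def blast_radius(total_containers: int, control_planes: int) -> int:
    """Worst-case number of containers affected when ONE control plane fails.

    Assumes containers are spread as evenly as possible over the control
    planes, so the largest share is ceil(total_containers / control_planes).
    """
    if total_containers < 0 or control_planes < 1:
        raise ValueError("need total_containers >= 0 and control_planes >= 1")
    # Integer ceiling division, exact even for very large counts.
    return -(-total_containers // control_planes)


if __name__ == "__main__":
    for planes in (1, 10, 100):
        print(f"{planes:>3} control plane(s): up to "
              f"{blast_radius(5000, planes)} of 5000 containers affected")
```

With a single control plane every container is exposed to one failure; splitting the same 5,000 containers over 100 control planes limits a single failure to 50 of them.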

Most Docker-based applications have NO need for the difficult migration to, and complex operation of, K8s. For those with existing K8s applications, however, it is possible to run K8s inside a Disposable Node to take advantage of advanced Community Cluster features such as Dynamic Alias and Infinite Disk.

Besides K8s, it is also possible to run other orchestration clusters (e.g. Docker Swarm, HashiCorp Nomad) inside Disposable Nodes.

MicroK8s

While installing full K8s inside Disposable Nodes is supported, lightweight K8s distributions (e.g. MicroK8s, K3s) fit Citizen Synergy's distributed control paradigm better.

The default K8s distribution for running inside LXC is MicroK8s. It removes the need to implement high availability (HA) at the LXC level, because HA is implemented by MicroK8s inside the LXC containers themselves.
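MicroK8s' built-in HA relies on dqlite, a Raft-based datastore, and Raft-style clusters stay available only while a majority of voting members can reach each other. The sketch below is the generic majority-quorum arithmetic behind that, not MicroK8s code; check the MicroK8s documentation for the exact number of voters it uses.

```python
def quorum_size(voters: int) -> int:
    """Smallest majority of `voters`: the votes needed to commit a write."""
    if voters < 1:
        raise ValueError("need at least one voter")
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can fail while a majority is still reachable."""
    return voters - quorum_size(voters)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} voter(s): quorum {quorum_size(n)}, "
              f"survives {tolerated_failures(n)} failure(s)")
```

Two voters tolerate no more failures than one, which is why three nodes is the practical minimum for an HA control plane.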

The following video introduces MicroK8s running inside LXD:

Default MicroK8s configuration:

Community Cluster vs Kubernetes

Community Cluster is designed for the home, while Kubernetes is designed for the data centre. Although both can be used to manage Application Containers (e.g. Docker), they are very different.

| Features | Community Cluster | Kubernetes |
|---|---|---|
| Compute Module | Any Commands, Packages, Containers, Machines | Special Containers |
| Compute Set | Node | Pod |
| Compute Host | Station with Nodes | Node with Pods |
| Compute Location | Neighbourhood, Data Centres | Data Centres |
| Replica | Active, Inactive | Active |
| Orchestrator | Many | One |
| Storage | Infinite Disk | Container Storage Interface |
| Network | Virtual Private Mesh | Container Network Interface |

Note: A Compute Set groups Modules together so they can share the same networking and storage.

1. Similarities

Replica Sets

Both run multiple replicas of the same application container to improve application reliability and performance across multiple machines.
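Why replicas help can be shown with a minimal sketch, assuming replica failures are independent and each replica is up with the same probability p: the chance that at least one replica is serving is 1 - (1 - p)^n. The function name `availability` is hypothetical.

```python
def availability(p_up: float, replicas: int) -> float:
    """Probability that at least one of `replicas` independent copies is up.

    Assumes every replica is up with the same probability `p_up` and that
    failures are independent (no shared power, network or control plane).
    """
    if not 0.0 <= p_up <= 1.0 or replicas < 1:
        raise ValueError("need 0 <= p_up <= 1 and replicas >= 1")
    return 1.0 - (1.0 - p_up) ** replicas


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"{n} replica(s) at 99% each: {availability(0.99, n):.6f}")
```

The independence assumption matters: replicas that share one control plane or one site tend to fail together, so the real gain is smaller than the formula suggests.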

Container Groups

Disposable Nodes inside a Community Cluster can loosely be viewed as the equivalent of Kubernetes Pods: the application containers running inside one share common networking and storage.
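The shared-networking, shared-storage idea can be sketched as a small data model. `Container`, `ContainerGroup`, `address_of` and `mount_point` are hypothetical names used only for illustration; they are not a Community Cluster or Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class ContainerGroup:
    """Hypothetical model of a Disposable Node (or a Kubernetes Pod).

    All member containers share ONE network address and ONE storage
    volume instead of each getting their own.
    """

    name: str
    ip_address: str   # shared by every member
    volume_path: str  # shared by every member
    containers: list[Container] = field(default_factory=list)

    def add(self, container: Container) -> None:
        self.containers.append(container)

    def _require(self, container_name: str) -> None:
        if all(c.name != container_name for c in self.containers):
            raise KeyError(container_name)

    def address_of(self, container_name: str, port: int) -> str:
        """Members differ only by port, because the address is shared."""
        self._require(container_name)
        return f"{self.ip_address}:{port}"

    def mount_point(self, container_name: str) -> str:
        """Every member sees the same volume."""
        self._require(container_name)
        return self.volume_path
```

In a real Kubernetes Pod the same effect comes from the containers sharing one network namespace (they can reach each other over `localhost`) and mounting the same volumes.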

2. Differences

Orchestrator

Kubernetes has ONE orchestrator running on multiple Nodes. It processes the data that information owners give it by centrally controlling both the application and the infrastructure.

Community Cluster has MANY information owners, each processing its own data by controlling its application and infrastructure independently.

Because each owner only needs to manage the small part of the Community Cluster that it uses, complexity is substantially reduced.
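The reduced scope can be sketched as a reconcile loop (the desired-state versus actual-state pattern that Kubernetes controllers also use) which each owner runs over only its own Nodes. `NodeState` and `reconcile` are hypothetical names; this illustrates the idea and is not a Community Cluster API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeState:
    owner: str
    name: str
    running: bool


def reconcile(owner: str, desired: dict[str, bool],
              actual: list[NodeState]) -> list[str]:
    """Return the actions ONE owner must take to match its desired state.

    Only nodes belonging to `owner` are looked at, so a mistake in one
    owner's desired state can never produce actions on another owner's
    nodes. Nodes the owner does not list in `desired` are left alone.
    """
    mine = {n.name: n.running for n in actual if n.owner == owner}
    actions = []
    for name, want_running in sorted(desired.items()):
        have_running = mine.get(name, False)
        if want_running and not have_running:
            actions.append(f"{owner}: start {name}")
        elif not want_running and have_running:
            actions.append(f"{owner}: stop {name}")
    return actions
```

Each owner's loop only ever sees its own slice of the cluster, so the state it has to reason about stays small regardless of how many other owners share the Community Cluster.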

Complete Management

Kubernetes handles little outside of containers (its computing abstraction). Fiduciary Exchange covers everything through the Disposable Node abstraction, from software to hardware and from support personnel to computer rooms.

All Nodes, whether physical or virtual, follow the same Modular Assist management framework.
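What "the same management framework for physical and virtual Nodes" buys can be sketched with a structural interface. Everything here (`ManagedNode`, `PhysicalNode`, `VirtualNode`, `heal`) is hypothetical and does not reflect Modular Assist's actual interface; the point is only that management code written once works for both kinds of Node.

```python
from typing import Protocol


class ManagedNode(Protocol):
    """Hypothetical interface: what a uniform framework needs from any Node."""

    name: str

    def is_healthy(self) -> bool: ...

    def restart(self) -> None: ...


class PhysicalNode:
    """Stand-in for a bare-metal machine (restart = power cycle)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.powered_on = False

    def is_healthy(self) -> bool:
        return self.powered_on

    def restart(self) -> None:
        self.powered_on = True  # a remote power cycle in a real system


class VirtualNode:
    """Stand-in for a container or VM (restart = recreate the instance)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.instance_running = False

    def is_healthy(self) -> bool:
        return self.instance_running

    def restart(self) -> None:
        self.instance_running = True  # recreate from its image in a real system


def heal(nodes: list[ManagedNode]) -> list[str]:
    """Restart every unhealthy node; the caller never checks the node type."""
    restarted = []
    for node in nodes:
        if not node.is_healthy():
            node.restart()
            restarted.append(node.name)
    return restarted
```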

Any Site

Kubernetes is designed to run in a few secure, stable data centres with high-quality networking. Fiduciary Exchange is designed to run almost anywhere in the world with ordinary internet access.

Universal Storage

Kubernetes has numerous volume types and provisioning methods.

Fiduciary Exchange has only one type, Infinite Disk, which looks and performs like a local disk so that it can support any application (including email, documents, video, databases and search engines).

Bidirectional Network

Kubernetes networking focuses on handling incoming traffic to services provided by Pods (e.g. via kube-proxy).

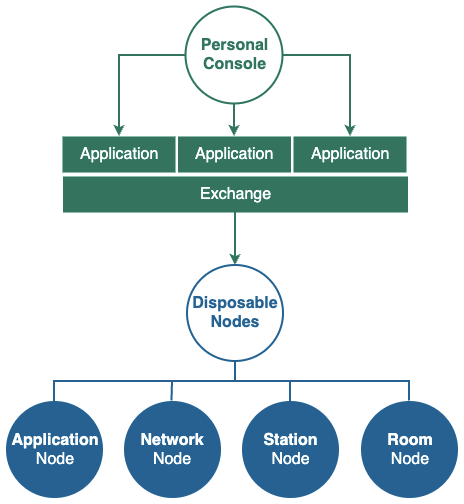

Fiduciary Exchange has Network Nodes that control both incoming and outgoing traffic for Application Nodes, Station Nodes and Room Nodes.
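Controlling traffic in both directions can be sketched as a default-deny filter that checks incoming and outgoing connections against the same rule list. The rule model (`Direction`, `Rule`, `NetworkNode.allowed`), the default-deny behaviour and the host names are assumptions for illustration, not a description of how Network Nodes are actually configured.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    INGRESS = "incoming"
    EGRESS = "outgoing"


@dataclass(frozen=True)
class Rule:
    direction: Direction
    node: str   # the Application/Station/Room Node the rule applies to
    peer: str   # remote host, or "*" for any host
    port: int   # 0 means any port


class NetworkNode:
    """Hypothetical default-deny filter applied in BOTH directions."""

    def __init__(self, rules: list[Rule]) -> None:
        self.rules = rules

    def allowed(self, direction: Direction, node: str, peer: str, port: int) -> bool:
        # A connection passes only if some rule explicitly matches it.
        return any(
            r.direction is direction
            and r.node == node
            and r.peer in ("*", peer)
            and r.port in (0, port)
            for r in self.rules
        )
```

With rules that accept mail from anyone but only let the mail Node send to one relay, an application that is compromised still cannot open arbitrary outgoing connections, which an incoming-only filter would not prevent.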